0. 前言

最近无事,在想着做一些工作。正好碰巧看到了yuanguobin01作者写的Lego-Loam的改进思路系列文章,这部分看完后遗憾于作者仅仅提供了一些初步的设想,而没有系统的学习代码,为此本文打算从作者提出的几个改进点来给出自己实现的策略思路。

1. 二维轮式里程计+IMU = 三维里程计 替换 原本3D激光前端里程计

这部分作者说通过二维里程计提供位移 + IMU航姿模块提供三向角度 投影成三维轮式IMU里程计 算率很低,实现很方便。很适合三维轮式里程计的操作。为此本文直接给出geometry_msgs::TwistStamped部分的操作,个人感觉使用IMU中值滤波。能够使整个结构更加紧凑。

void wheelHandler(const geometry_msgs::TwistStampedConstPtr &wheel_msg)

{

using Eigen::Vector3d;

if (wheel_msg->header.stamp.toSec() <= last_wheel_t)

{

ROS_WARN("wheel message in disorder!");

return;

}

double t = wheel_msg->header.stamp.toSec();

last_wheel_t = t;

double vx = wheel_msg->twist.linear.x;

double vy = wheel_msg->twist.linear.y;

double vz = wheel_msg->twist.linear.z;

double rx = wheel_msg->twist.angular.x;

double ry = wheel_msg->twist.angular.y;

double rz = wheel_msg->twist.angular.z;

Vector3d vel(vx, vy, vz);

Vector3d gyr(rx, ry, rz);

inputWheel(t, vel, gyr);

if (init_wheel)

{

latest_time = t;

init_wheel = 0;

return;

}

double dt = t - latest_time;

latest_time = t;

tmp_Q = tmp_Q * Utility::deltaQ(gyr * dt);

tmp_P = tmp_P + tmp_Q.toRotationMatrix() * vel * dt;

tmp_V = vel;

nav_msgs::Odometry wheelOdometry;

wheelOdometry.header.frame_id = "/camera_init";

wheelOdometry.child_frame_id = "/laser_odom";

wheelOdometry.header.stamp = ros::Time().fromSec(t);

wheelOdometry.pose.pose.orientation.x = tmp_Q.x();

wheelOdometry.pose.pose.orientation.y = tmp_Q.y();

wheelOdometry.pose.pose.orientation.z = tmp_Q.z();

wheelOdometry.pose.pose.orientation.w = tmp_Q.w();

wheelOdometry.pose.pose.position.x = tmp_P.x();

wheelOdometry.pose.pose.position.y = tmp_P.y();

wheelOdometry.pose.pose.position.z = tmp_P.z();

pubWheelOdometry.publish(wheelOdometry);

geometry_msgs::PoseStamped wheelPose;

wheelPose.header = wheelOdometry.header;

wheelPose.pose = wheelOdometry.pose.pose;

wheelPath.header.stamp = wheelOdometry.header.stamp;

wheelPath.poses.push_back(wheelPose);

wheelPath.header.frame_id = "/camera_init";

pubWheelPath.publish(wheelPath);

if (saveWheelOdo) {

std::ofstream founW("xxx/results/wheel_odo.txt",

std::ios::app);

founW.setf(std::ios::fixed, std::ios::floatfield);

founW.precision(5);

founW << wheelOdometry.header.stamp.toSec() << " ";

founW.precision(5);

founW << wheelOdometry.pose.pose.position.x << " "

<< wheelOdometry.pose.pose.position.y << " "

<< wheelOdometry.pose.pose.position.z << " "

<< wheelOdometry.pose.pose.orientation.x << " "

<< wheelOdometry.pose.pose.orientation.y << " "

<< wheelOdometry.pose.pose.orientation.z << " "

<< wheelOdometry.pose.pose.orientation.w << std::endl;

founW.close();

}

return;

}

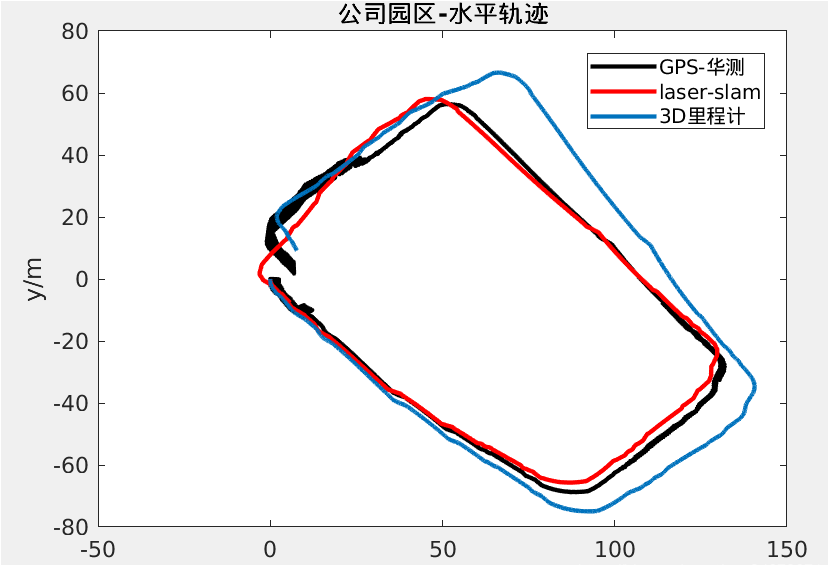

2. 增加GPS模块

该部分作者说GPS因子的添加着重提高建图精度和长时间的鲁棒性,也可以用做回环检测。这部分的确能够给LEGO-LOAM系统带来良好的收益。这部分我们可以先看一下坐标系转换

void laserOdometryHandler(const nav_msgs::Odometry::ConstPtr& laserOdometry)

{

// ********************************* 激光里程计 --> 全局位姿 ***********************************

// 0. 北斗星通的惯导系为北东地,大地坐标转换函数的坐标系为东北天,这里现将惯导系作处理,转为东北天

// 将大地坐标的xyz和惯导输出的rpy统一到东北天坐标系下。

// 1. 激光系 --> 车体系:一个平移,不考虑惯导安装,因为可以直接从第一帧的RTK消息中,得知第一帧的车体系到东北天坐标系下的变换

// 和ros标准保持一致,默认将前进方向设置为x,向上为z

// 2. 车体系 --> 东北天:坐标变换,利用第一帧的xyzrpy变换得到。

// ************************************************************************************************

Eigen::Isometry3d mLidar = Eigen::Isometry3d::Identity(); // 将当前的位姿赋值给mBody

mLidar.rotate(Eigen::Quaterniond(

laserOdometry2.pose.pose.orientation.x,

laserOdometry2.pose.pose.orientation.y,

laserOdometry2.pose.pose.orientation.z,

laserOdometry2.pose.pose.orientation.w

));

mLidar.translate(Eigen::Vector3d(

laserOdometry2.pose.pose.position.x, // 直接将激光剪掉

laserOdometry2.pose.pose.position.y,

laserOdometry2.pose.pose.position.z

));

// 1. mLidar --> mBody

Eigen::Isometry3d tLidar2Body = Eigen::Isometry3d::Identity();//平移

tLidar2Body.translate(Eigen::Vector3d(1.83,0,0));//旋转

Eigen::Isometry3d mBody = tLidar2Body*mLidar;

// 2. mBody --> ENU

Eigen::Isometry3d mENU = tBody2ENU*mBody;

// ********************************* 全局位姿 --> 激光里程计 ***********************************

// Body到ENU原点,因此是负数

mENU.translate(Eigen::Vector3d(RTK->x,RTK->y,RTK->Ati);

// RTK的RPY测量的是车体相对于ENU坐标系的旋转

mENU.rotate(Eigen::Quaterniond(Eigen::AngleAxisd(RTK->yaw*PI/180.0,Eigen::Vector3d::UnitZ())*

Eigen::AngleAxisd(RTK->pitch*PI/180.0,Eigen::Vector3d::UnitY())*

Eigen::AngleAxisd(RTK->roll*PI/180.0,Eigen::Vector3d::UnitX()));//欧拉角转四元数

// 1. ENU --> mBody

Eigen::Isometry3d mBody = tBody2ENU.inverse()*mENU;

// 2. mBody --> mLidar

Eigen::Isometry3d mLidar = tLidar2Body.inverse()*mBody;

}

// 涉及到三个坐标系:gps,body,lidar

// body和lidar差一个平移

void rtkHandler(const bdxt::rtk::ConstPtr& RTK)

{

if(!is_gps_init){ // gps初始值记录

if(RTK->navStatus == 4 && RTK->rtkStatus == 5){

// qBody2ENU计算车体坐标系到gps(东北天)坐标系的旋转

// 而可以获取的imu读数是惯导系下的值(北东地),因此需要作变换:BODY-->北东地-->东北天

// 涉及两个四元数:qBODY2NED,qNED2ENU

Eigen::Vector3d vBody2ENU; // gps初始化位姿:x,y,z,pitch,yaw,roll,同时也是激光原点坐标系和大地坐标系的静态变换

Eigen::Quaterniond qBody2ENU; // body到ENU的旋转变换

// RTK的RPY测量的是车体相对于ENU坐标系的旋转

Eigen::Quaterniond qBodyNED_; // Body相对于NED坐标系下的旋转,实际上是NED2BODY

qBodyNED_ = Eigen::AngleAxisd(RTK->yaw*PI/180.0,Eigen::Vector3d::UnitZ())*

Eigen::AngleAxisd(RTK->pitch*PI/180.0,Eigen::Vector3d::UnitY())*

Eigen::AngleAxisd(RTK->roll*PI/180.0,Eigen::Vector3d::UnitX());//欧拉角转四元数

Eigen::Quaterniond qBody2NED = qBodyNED_.conjugate();//反转四元数

// 东北天 --> 北东地,先z轴旋转PI/2,再x轴旋转PI,注意顺序

Eigen::Quaterniond qNED2ENU;

qNED2ENU = Eigen::AngleAxisd(PI/2,Eigen::Vector3d::UnitZ())*

Eigen::AngleAxisd(PI,Eigen::Vector3d::UnitX());

//注意顺序,从右到左变换

qBody2ENU = qNED2ENU*qBody2NED;

// Body到ENU原点,因此是负数

vBody2ENU[0] = -RTK->x; // 如果使用第一帧为坐标原点坐标转换,设置为0

vBody2ENU[1] = -RTK->y; // 如果使用第一帧为坐标原点坐标转换,设置为0

vBody2ENU[2] = -RTK->Ati; // 如果使用第一帧为坐标原点坐标转换,设置为0

// 得到变换矩阵

tBody2ENU.rotate(qBody2ENU);

tBody2ENU.translate(vBody2ENU);

is_gps_init = true;

}

else{

ROS_INFO("bad RTK status\n");

}

}

}

在转换后我们可以通过EKF或者Ceres带入进去,并在前端实现优化。而作者说的后端部分优化可以参考下面的部分文档,基本上就是在GTSAM中加入GPS因子

isamCurrentEstimate = isam->calculateEstimate();//这部分可以替换为RTK,不需要实时融合传入。如果认为RTK精度足够的话

double recentOptimizedX = lastOptimizedPose.translation().x();

double recentOptimizedY = lastOptimizedPose.translation().y();

double bigNoiseTolerentToXY = 1000000000.0; // 1e9

double gpsAltitudeNoiseScore = 250.0; // if height is misaligned after loop clsosing, use this value bigger

gtsam::Vector robustNoiseVector3(3); // gps factor has 3 elements (xyz)

robustNoiseVector3 << bigNoiseTolerentToXY, bigNoiseTolerentToXY, gpsAltitudeNoiseScore; // means only caring altitude here. (because LOAM-like-methods tends to be asymptotically flyging)

robustGPSNoise = gtsam::noiseModel::Robust::Create(

gtsam::noiseModel::mEstimator::Cauchy::Create(1), // optional: replacing Cauchy by DCS or GemanMcClure is okay but Cauchy is empirically good.

gtsam::noiseModel::Diagonal::Variances(robustNoiseVector3) );

// 找到最合适的GPS信息,这里速度够慢可以不对齐

double eps = 0.1; // 在eps内找到一个GPS主题

while (!gpsBuf.empty()) {

auto thisGPS = gpsBuf.front();

auto thisGPSTime = thisGPS->header.stamp.toSec();

if( abs(thisGPSTime - timeLaserOdometry) < eps ) {

currGPS = thisGPS;

hasGPSforThisKF = true;

break;

} else {

hasGPSforThisKF = false;

}

gpsBuf.pop();

}

mBuf.unlock();

// gps factor

const int curr_node_idx = keyframePoses.size() - 1; // 因为索引从0开始(实际上这个索引可以是任何数字,但为了简单的实现,我们遵循顺序索引)

if(hasGPSforThisKF) {

double curr_altitude_offseted = currGPS->altitude - gpsAltitudeInitOffset;

mtxRecentPose.lock();

gtsam::Point3 gpsConstraint(recentOptimizedX, recentOptimizedY, curr_altitude_offseted); // 在这个例子中,只调整高度(对于x和y,设置了很大的噪音)

mtxRecentPose.unlock();

gtSAMgraph.add(gtsam::GPSFactor(curr_node_idx, gpsConstraint, robustGPSNoise));

cout << "GPS factor added at node " << curr_node_idx << endl;

}

initialEstimate.insert(curr_node_idx, poseTo);

!--https://github.com/gisbi-kim/SC-A-LOAM/blob/main/src/laserPosegraphOptimization.cpp--

3. Scan-Context加入

这部分可以参考我之前写的系列文章。

文中也给出在LOAM系列中回环检测主要存在有四种方法

- 传统的领域距离搜索+ICP匹配

- 基于scan context系列的粗匹配+ICP精准匹配的回环检测

- 基于scan context的回环检测

- 基于Intensity scan context+ICP的回环检测

下面是Intensity scan context的部分操作

void mapOptimization::performLoopClosure(void) {

if (cloudKeyPoses3D->points.empty() == true)

return;

// try to find close key frame if there are any

if (potentialLoopFlag == false) {

if (detectLoopClosure() == true) {

std::cout << std::endl;

potentialLoopFlag = true; // find some key frames that is old enough or close enough for loop closure

timeSaveFirstCurrentScanForLoopClosure = timeLaserOdometry;

}

if (potentialLoopFlag == false) {

return;

}

}

// reset the flag first no matter icp successes or not

potentialLoopFlag = false;

// *****

// Main

// *****

// make common variables at forward

float x, y, z, roll, pitch, yaw;

Eigen::Affine3f correctionCameraFrame;

float noiseScore = 0.5; // constant is ok...

gtsam::Vector Vector6(6);

Vector6 << noiseScore, noiseScore, noiseScore, noiseScore, noiseScore, noiseScore;

constraintNoise = noiseModel::Diagonal::Variances(Vector6);

robustNoiseModel = gtsam::noiseModel::Robust::Create(

gtsam::noiseModel::mEstimator::Cauchy::Create(1), // optional: replacing Cauchy by DCS or GemanMcClure

gtsam::noiseModel::Diagonal::Variances(Vector6)

); // - checked it works. but with robust kernel, map modification may be delayed (i.e,. requires more true-positive loop factors)

bool isValidRSloopFactor = false;

bool isValidSCloopFactor = false;

/*

* 1. RS loop factor (radius search)

* 将RS检测到的历史帧和当前帧匹配,求transform, 作为约束边

*/

if (RSclosestHistoryFrameID != -1) {

pcl::IterativeClosestPoint<PointType, PointType> icp;

icp.setMaxCorrespondenceDistance(100);

icp.setMaximumIterations(100);

icp.setTransformationEpsilon(1e-6);

icp.setEuclideanFitnessEpsilon(1e-6);

icp.setRANSACIterations(0);

// Align clouds

icp.setInputSource(RSlatestSurfKeyFrameCloud);

icp.setInputTarget(RSnearHistorySurfKeyFrameCloudDS);

pcl::PointCloud<PointType>::Ptr unused_result(new pcl::PointCloud<PointType>());

icp.align(*unused_result);

// 通过score阈值判定icp是否匹配成功

std::cout << "[RS] ICP fit score: " << icp.getFitnessScore() << std::endl;

if (icp.hasConverged() == false || icp.getFitnessScore() > historyKeyframeFitnessScore) {

std::cout << "[RS] Reject this loop (bad icp fit score, > " << historyKeyframeFitnessScore << ")"

<< std::endl;

isValidRSloopFactor = false;

} else {

std::cout << "[RS] The detected loop factor is added between Current [ " << latestFrameIDLoopCloure

<< " ] and RS nearest [ " << RSclosestHistoryFrameID << " ]" << std::endl;

isValidRSloopFactor = true;

}

// 这里RS检测成功,加入约束边

if (isValidRSloopFactor == true) {

correctionCameraFrame = icp.getFinalTransformation(); // get transformation in camera frame (because points are in camera frame)

pcl::getTranslationAndEulerAngles(correctionCameraFrame, x, y, z, roll, pitch, yaw);

Eigen::Affine3f correctionLidarFrame = pcl::getTransformation(z, x, y, yaw, roll, pitch);

// transform from world origin to wrong pose

Eigen::Affine3f tWrong = pclPointToAffine3fCameraToLidar(

cloudKeyPoses6D->points[latestFrameIDLoopCloure]);

// transform from world origin to corrected pose

Eigen::Affine3f tCorrect =

correctionLidarFrame * tWrong; // pre-multiplying -> successive rotation about a fixed frame

pcl::getTranslationAndEulerAngles(tCorrect, x, y, z, roll, pitch, yaw);

gtsam::Pose3 poseFrom = Pose3(Rot3::RzRyRx(roll, pitch, yaw), Point3(x, y, z));

gtsam::Pose3 poseTo = pclPointTogtsamPose3(cloudKeyPoses6D->points[RSclosestHistoryFrameID]);

gtsam::Vector Vector6(6);

std::lock_guard<std::mutex> lock(mtx);

gtSAMgraph.add(

BetweenFactor<Pose3>(latestFrameIDLoopCloure, RSclosestHistoryFrameID, poseFrom.between(poseTo),

robustNoiseModel));

isam->update(gtSAMgraph);

isam->update();

gtSAMgraph.resize(0);

}

}

/*

* 2. SC loop factor (scan context)

* SC检测成功,进行icp匹配

*/

if (SCclosestHistoryFrameID != -1) {

pcl::IterativeClosestPoint<PointType, PointType> icp;

icp.setMaxCorrespondenceDistance(100);

icp.setMaximumIterations(100);

icp.setTransformationEpsilon(1e-6);

icp.setEuclideanFitnessEpsilon(1e-6);

icp.setRANSACIterations(0);

// Align clouds

// Eigen::Affine3f icpInitialMatFoo = pcl::getTransformation(0, 0, 0, yawDiffRad, 0, 0); // because within cam coord: (z, x, y, yaw, roll, pitch)

// Eigen::Matrix4f icpInitialMat = icpInitialMatFoo.matrix();

icp.setInputSource(SClatestSurfKeyFrameCloud);

icp.setInputTarget(SCnearHistorySurfKeyFrameCloudDS);

pcl::PointCloud<PointType>::Ptr unused_result(new pcl::PointCloud<PointType>());

icp.align(*unused_result);

// icp.align(*unused_result, icpInitialMat); // PCL icp non-eye initial is bad ... don't use (LeGO LOAM author also said pcl transform is weird.)

std::cout << "[SC] ICP fit score: " << icp.getFitnessScore() << std::endl;

if (icp.hasConverged() == false || icp.getFitnessScore() > historyKeyframeFitnessScore) {

std::cout << "[SC] Reject this loop (bad icp fit score, > " << historyKeyframeFitnessScore << ")"

<< std::endl;

isValidSCloopFactor = false;

} else {

std::cout << "[SC] The detected loop factor is added between Current [ " << latestFrameIDLoopCloure

<< " ] and SC nearest [ " << SCclosestHistoryFrameID << " ]" << std::endl;

isValidSCloopFactor = true;

}

// icp匹配成功也加入约束边

if (isValidSCloopFactor == true) {

correctionCameraFrame = icp.getFinalTransformation(); // get transformation in camera frame (because points are in camera frame)

pcl::getTranslationAndEulerAngles(correctionCameraFrame, x, y, z, roll, pitch, yaw);

gtsam::Pose3 poseFrom = Pose3(Rot3::RzRyRx(roll, pitch, yaw), Point3(x, y, z));

gtsam::Pose3 poseTo = Pose3(Rot3::RzRyRx(0.0, 0.0, 0.0), Point3(0.0, 0.0, 0.0));

std::lock_guard<std::mutex> lock(mtx);

// gtSAMgraph.add(BetweenFactor<Pose3>(latestFrameIDLoopCloure, closestHistoryFrameID, poseFrom.between(poseTo), constraintNoise)); // original

gtSAMgraph.add(

BetweenFactor<Pose3>(latestFrameIDLoopCloure, SCclosestHistoryFrameID, poseFrom.between(poseTo),

robustNoiseModel)); // giseop

isam->update(gtSAMgraph);

isam->update();

gtSAMgraph.resize(0);

}

}

// flagging

aLoopIsClosed = true;

} // performLoopClosure

4. 添加一段距离的闭环约束

这部分我感觉有可能作者想要表达的意思是帧间纠正的问题,即为两点云帧之间的约束,而这部分其实就是在LEGO-LOAM部分上加入距离判断因子

void extractSurroundingKeyFrames(pcl::PointCloud<PointType>::Ptr cloudToExtract)

{

// vector: cloudToExtract有多少个位姿节点,就有多少帧点云数据

std::vector<pcl::PointCloud<PointType>> laserCloudCornerSurroundingVec;

std::vector<pcl::PointCloud<PointType>> laserCloudSurfSurroundingVec;

laserCloudCornerSurroundingVec.resize(cloudToExtract->size());

laserCloudSurfSurroundingVec.resize(cloudToExtract->size());

// extract surrounding map

#pragma omp parallel for num_threads(numberOfCores)

// 遍历提取到的位姿节点

for (int i = 0; i < (int)cloudToExtract->size(); ++i)

{

// 检查两个位姿节点的距离,并在一定范围内选取优化特征点

if (pointDistance(cloudToExtract->points[i], cloudKeyPoses3D->back()) > surroundingKeyframeSearchRadius+1)//生成局部地图0-surroundingKeyframeSearchRadius+1

continue;

// 取id,用来取姿态

int thisKeyInd = (int)cloudToExtract->points[i].intensity;

// 将提取的边沿点特征集合,变换到世界坐标系W

laserCloudCornerSurroundingVec[i] = *transformPointCloud(cornerCloudKeyFrames[thisKeyInd], &cloudKeyPoses6D->points[thisKeyInd]);

// 将提取的平面点特征集合,变换到世界坐标系W

laserCloudSurfSurroundingVec[i] = *transformPointCloud(surfCloudKeyFrames[thisKeyInd], &cloudKeyPoses6D->points[thisKeyInd]);

}

// fuse the map

/// 清空局部地图,再合并

laserCloudCornerFromMap->clear();

laserCloudSurfFromMap->clear();

// 遍历提取到的位姿节点

for (int i = 0; i < (int)cloudToExtract->size(); ++i)

{

// 合并边缘点和平面点特征,构成 世界坐标系W下的局部特征点云地图

*laserCloudCornerFromMap += laserCloudCornerSurroundingVec[i];

*laserCloudSurfFromMap += laserCloudSurfSurroundingVec[i];

}

/// 降采样

// Downsample the surrounding corner key frames (or map)

downSizeFilterCorner.setInputCloud(laserCloudCornerFromMap);

downSizeFilterCorner.filter(*laserCloudCornerFromMapDS);

laserCloudCornerFromMapDSNum = laserCloudCornerFromMapDS->size();

// Downsample the surrounding surf key frames (or map)

downSizeFilterSurf.setInputCloud(laserCloudSurfFromMap);

downSizeFilterSurf.filter(*laserCloudSurfFromMapDS);

laserCloudSurfFromMapDSNum = laserCloudSurfFromMapDS->size();

}

void mapOptimization::scan2MapOptimization() {

if (laserCloudCornerFromMapDSNum > 10 && laserCloudSurfFromMapDSNum > 100) {//这样即可代表降采样后特征点仍然足够

kdtreeCornerFromMap->setInputCloud(laserCloudCornerFromMapDS);

kdtreeSurfFromMap->setInputCloud(laserCloudSurfFromMapDS);

for (int iterCount = 0; iterCount < 10; iterCount++) {

laserCloudOri->clear();

coeffSel->clear();

cornerOptimization(iterCount);

surfOptimization(iterCount);

if (LMOptimization(iterCount) == true)

break;

}

// 迭代结束更新相关的转移矩阵

transformUpdate();

}

}

5. 参考链接

https://blog.csdn.net/qq_34672671/article/details/107542816?spm=1001.2014.3001.5501

https://www.guyuehome.com/34085

https://github.com/mohdomama/LIO-SAM

评论(3)

您还未登录,请登录后发表或查看评论