Hands-On TensorFlow: Transfer Learning with VGG16 for Dogs vs. Cats

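Continuing my hands-on TensorFlow deep learning practice, today's project is VGG16 on Dogs vs. Cats. The network consists of 13 convolutional layers and 3 fully connected layers. I am not very familiar with Python, so the hard parts of building and debugging it were reading and processing the data, transforming tensor dimensions, and learning to use the various functions flexibly.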
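To make the "13 convolutional + 3 fully connected" structure concrete, here is a small pure-Python sketch (shape arithmetic only, no TensorFlow) that walks the VGG16 configuration used in model.py and tracks the feature-map size. Every 3x3 convolution uses stride 1 with SAME padding, so it keeps the spatial size; each 2x2, stride-2 max pool with SAME padding gives ceil(size / 2).

```python
import math

# Output channels of each 3x3 conv layer, grouped into VGG16's five blocks
VGG16_BLOCKS = [[64, 64], [128, 128], [256, 256, 256], [512, 512, 512], [512, 512, 512]]
FC_UNITS = [4096, 4096, 2]  # fc6, fc7, and the 2-class fine-tuned fc8


def trace_shapes(size=224, in_channels=3):
    """Return (layer_name, height, width, channels) for every conv and pool layer."""
    trace = []
    channels = in_channels
    for b, block in enumerate(VGG16_BLOCKS, start=1):
        for j, out_ch in enumerate(block, start=1):
            # 3x3 conv, stride 1, SAME padding: spatial size unchanged
            channels = out_ch
            trace.append((f"conv{b}_{j}", size, size, channels))
        # 2x2 max pool, stride 2, SAME padding: ceil(size / 2)
        size = math.ceil(size / 2)
        trace.append((f"pool{b}", size, size, channels))
    return trace


shapes = trace_shapes()
n_conv = sum(len(block) for block in VGG16_BLOCKS)
_, h, w, c = shapes[-1]
flat = h * w * c  # length of the vector fed into fc6 after flattening pool5
print(n_conv, len(FC_UNITS), (h, w, c), flat)
```

The 224x224 input shrinks to 7x7 after five poolings, which is why the first fully connected layer sees 7 * 7 * 512 = 25088 inputs.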

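I got stuck in two places. First, the training process kept dying partway through. I changed quite a few parameters without any effect; the real cause turned out to be the dataset. I had started with a cats-and-dogs dataset downloaded from Microsoft's website, and after switching to a different dataset the problem went away. Second, during the final test, loading an image kept producing an error about its dimensions.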
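The post does not say exactly what was wrong with the first dataset. One plausible cause (an assumption, not confirmed here) is a file that `tf.image.decode_jpeg` cannot decode, such as an empty file or one that is not really a JPEG, which stops the queue-based input pipeline mid-training. A cheap pre-check is sketched below as a hypothetical helper, `find_bad_images`: it walks the dataset folder and flags empty files, files that do not start with the JPEG start-of-image bytes `FF D8 FF`, and files without a `.jpg`/`.jpeg` extension.

```python
import os

JPEG_MAGIC = b"\xff\xd8\xff"  # JPEG files begin with the SOI marker followed by another marker


def find_bad_images(file_dir, exts=(".jpg", ".jpeg")):
    """Return paths under file_dir that are not JPEG-named, are empty, or lack the JPEG magic bytes."""
    bad = []
    for root, _dirs, files in os.walk(file_dir):
        for name in files:
            path = os.path.join(root, name)
            if not name.lower().endswith(exts):
                bad.append(path)  # e.g. Thumbs.db or other non-image files
                continue
            with open(path, "rb") as f:
                head = f.read(3)
            if head != JPEG_MAGIC:
                bad.append(path)  # empty file, or not actually a JPEG
    return sorted(bad)
```

Running it once over the data folder and moving the reported files out of the class folders before training is usually enough. It is a heuristic: a JPEG that is truncated partway through still passes the header check.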

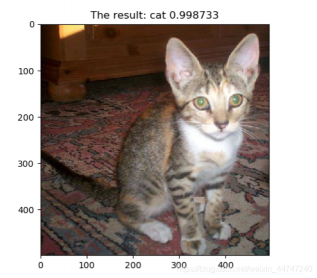

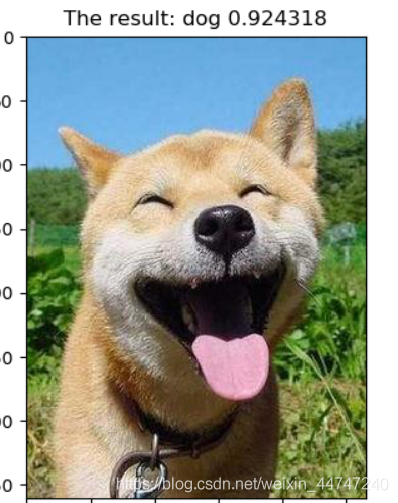

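Transfer learning means continuing training from weights that someone else has already trained, so that you end up with a model adapted to your own task. The results are shown below: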
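The idea can be shown without TensorFlow. In this toy numpy sketch (random data, not the author's setup), a fixed random projection with ReLU stands in for the frozen pretrained layers, and only a small softmax head, the analogue of fc8, is updated by plain gradient descent on the cross-entropy loss.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy data (hypothetical): 200 samples, 20 input features, 2 classes
X = rng.normal(size=(200, 20))
y = (X[:, 0] + X[:, 1] > 0).astype(int)
Y = np.eye(2)[y]  # one-hot labels

# "Pretrained" layers: fixed projection + ReLU, never updated (stands in for conv1_1..fc7)
W_frozen = rng.normal(size=(20, 64)) / np.sqrt(20)
W_frozen_before = W_frozen.copy()
F = np.maximum(X @ W_frozen, 0.0)  # features computed once, since these layers are frozen

# Trainable head (stands in for fc8): 64 features -> 2 class logits
W_head = np.zeros((64, 2))
b_head = np.zeros(2)


def softmax(z):
    z = z - z.max(axis=1, keepdims=True)  # subtract row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


losses = []
lr = 0.05
for _ in range(300):
    P = softmax(F @ W_head + b_head)
    losses.append(-np.mean(np.sum(Y * np.log(P + 1e-12), axis=1)))
    G = (P - Y) / len(F)        # gradient of the mean cross-entropy w.r.t. the logits
    W_head -= lr * (F.T @ G)    # only the head's parameters are updated
    b_head -= lr * G.sum(axis=0)

accuracy = np.mean(np.argmax(F @ W_head + b_head, axis=1) == y)
print(f"loss {losses[0]:.3f} -> {losses[-1]:.3f}, train accuracy {accuracy:.2f}")
```

The frozen weights are untouched at the end; all the learning happens in the head, which is the same split as training only fc8 on top of fixed VGG16 features.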

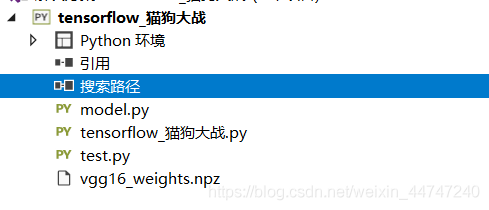

The overall structure is as follows:

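model.py builds the network, test.py runs the final prediction, and the Dogs vs. Cats (猫狗大战) script runs the training. First, let's look at how the 16-layer VGG16 network is built: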

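The model defines convolution, pooling and fully connected layers, then stacks 13 convolutional layers and 3 fully connected layers. Because the fine-tuned model only needs to tell cats from dogs (2 classes), only the last fully connected layer is changed: it outputs 2 classes and is created with `trainable=True`, while all other layers stay frozen.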
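A quick count shows how small the fine-tuned part is. This sketch computes the parameter count (weights plus biases) of every layer in the configuration used by model.py, with 3x3 kernels and fc8 reduced to 2 outputs, and splits the total into frozen and trainable parameters.

```python
# Output channels of the 13 conv layers (3x3 kernels) and units of the 3 FC layers in model.py
CONV_CHANNELS = [64, 64, 128, 128, 256, 256, 256, 512, 512, 512, 512, 512, 512]
FC_UNITS = [4096, 4096, 2]
FLAT_SIZE = 7 * 7 * 512  # pool5 output flattened, for a 224x224 input


def layer_params():
    """Return (name, n_params, trainable) for every weight layer."""
    layers = []
    in_ch = 3
    for i, out_ch in enumerate(CONV_CHANNELS, start=1):
        layers.append((f"conv{i}", 3 * 3 * in_ch * out_ch + out_ch, False))
        in_ch = out_ch
    in_size = FLAT_SIZE
    for i, units in enumerate(FC_UNITS, start=6):
        trainable = i == 8  # only fc8 is created with trainable=True
        layers.append((f"fc{i}", in_size * units + units, trainable))
        in_size = units
    return layers


layers = layer_params()
frozen = sum(n for _, n, t in layers if not t)
trainable = sum(n for _, n, t in layers if t)
print(len(layers), frozen, trainable)
```

Only 8,194 of roughly 134 million parameters are trained. With the original 1000-class fc8, the same arithmetic gives 138,357,544 parameters, the figure usually quoted for VGG16.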

```python
import numpy as np
import tensorflow as tf  # TensorFlow 1.x API (tf.variable_scope, tf.get_variable, tf.train.Saver)

# Per-channel RGB means that were subtracted during VGG pretraining
VGG_MEANS = [123.68, 116.779, 103.939]


# Model definition
class vgg16:
    def __init__(self, imgs):
        # Every kernel/bias is appended here in creation order, so load_weights can assign by index
        self.parameters = []
        # The pretrained weights expect mean-subtracted RGB input
        self.imgs = imgs - tf.constant(VGG_MEANS, dtype=tf.float32)
        self.convlayers()
        self.fc_layers()
        # Class probabilities; self.fc8 stays as raw logits for the loss
        self.probs = tf.nn.softmax(self.fc8)

    def saver(self):
        return tf.train.Saver()

    # Convolution layer: 3x3 kernel, stride 1, SAME padding, ReLU
    def conv(self, name, input_data, out_channel, trainable=False):
        in_channel = input_data.get_shape().as_list()[-1]  # number of input channels
        with tf.variable_scope(name):
            kernel = tf.get_variable('weights', [3, 3, in_channel, out_channel],
                                     dtype=tf.float32, trainable=trainable)
            biases = tf.get_variable('biases', [out_channel],
                                     dtype=tf.float32, trainable=trainable)
            conv_res = tf.nn.conv2d(input_data, kernel, [1, 1, 1, 1], padding='SAME')
            res = tf.nn.bias_add(conv_res, biases)
            out = tf.nn.relu(res, name=name)
        self.parameters += [kernel, biases]
        return out

    # Fully connected layer; relu=False returns raw logits (used for fc8)
    def fc(self, name, input_data, out_channel, trainable=True, relu=True):
        shape = input_data.get_shape().as_list()
        if len(shape) == 4:
            size = shape[-1] * shape[-2] * shape[-3]
        else:
            size = shape[1]
        # Flatten to [batch, size]
        input_data_flat = tf.reshape(input_data, [-1, size])
        with tf.variable_scope(name):
            weights = tf.get_variable('weights', shape=[size, out_channel],
                                      dtype=tf.float32, trainable=trainable)
            biases = tf.get_variable('biases', shape=[out_channel],
                                     dtype=tf.float32, trainable=trainable)
            out = tf.nn.bias_add(tf.matmul(input_data_flat, weights), biases)
            if relu:
                out = tf.nn.relu(out)
        self.parameters += [weights, biases]
        return out

    # 2x2 max pooling, stride 2
    def maxpool(self, name, input_data):
        return tf.nn.max_pool(input_data, [1, 2, 2, 1], [1, 2, 2, 1], padding='SAME', name=name)

    # Stack of 13 convolutional layers (all frozen)
    def convlayers(self):
        # conv1
        self.conv1_1 = self.conv('conv1_1', self.imgs, 64, trainable=False)
        self.conv1_2 = self.conv('conv1_2', self.conv1_1, 64, trainable=False)
        self.pool1 = self.maxpool('pool1', self.conv1_2)
        # conv2
        self.conv2_1 = self.conv('conv2_1', self.pool1, 128, trainable=False)
        self.conv2_2 = self.conv('conv2_2', self.conv2_1, 128, trainable=False)
        self.pool2 = self.maxpool('pool2', self.conv2_2)
        # conv3
        self.conv3_1 = self.conv('conv3_1', self.pool2, 256, trainable=False)
        self.conv3_2 = self.conv('conv3_2', self.conv3_1, 256, trainable=False)
        self.conv3_3 = self.conv('conv3_3', self.conv3_2, 256, trainable=False)
        self.pool3 = self.maxpool('pool3', self.conv3_3)
        # conv4
        self.conv4_1 = self.conv('conv4_1', self.pool3, 512, trainable=False)
        self.conv4_2 = self.conv('conv4_2', self.conv4_1, 512, trainable=False)
        self.conv4_3 = self.conv('conv4_3', self.conv4_2, 512, trainable=False)
        self.pool4 = self.maxpool('pool4', self.conv4_3)
        # conv5
        self.conv5_1 = self.conv('conv5_1', self.pool4, 512, trainable=False)
        self.conv5_2 = self.conv('conv5_2', self.conv5_1, 512, trainable=False)
        self.conv5_3 = self.conv('conv5_3', self.conv5_2, 512, trainable=False)
        self.pool5 = self.maxpool('pool5', self.conv5_3)

    # 3 fully connected layers
    def fc_layers(self):
        self.fc6 = self.fc('fc1', self.pool5, 4096, trainable=False)
        self.fc7 = self.fc('fc2', self.fc6, 4096, trainable=False)
        # Fine-tuned layer: trainable, 2 classes, no ReLU so it yields raw logits
        self.fc8 = self.fc('fc3', self.fc7, 2, trainable=True, relu=False)

    # Load the pretrained weights
    def load_weights(self, weight_file, sess):
        weights = np.load(weight_file)
        keys = sorted(weights.keys())
        for i, k in enumerate(keys):
            # Sorted keys follow self.parameters order; 30 and 31 are the 1000-class fc8
            # weights and biases, which are skipped so our 2-class fc8 keeps its own init
            if i not in [30, 31]:
                sess.run(self.parameters[i].assign(weights[k]))
        print("weights loaded")
```
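Why does `load_weights` skip indices 30 and 31? Assuming the `.npz` file uses the key naming of the widely distributed `vgg16_weights.npz` (`conv1_1_W`, `conv1_1_b`, and so on up to `fc8_W`, `fc8_b`; this naming is an assumption about the file), sorting puts each layer's `_W` before its `_b` because uppercase letters sort before lowercase ones. That matches the `[kernel, biases]` order in `self.parameters`, and the last two keys are the 1000-class fc8 head. The sketch rebuilds that key list and also shows a name-based skip that does not depend on counting positions.

```python
# Hypothetical key list mimicking vgg16_weights.npz naming (assumption: <layer>_W / <layer>_b)
CONV_LAYERS = ["conv1_1", "conv1_2", "conv2_1", "conv2_2",
               "conv3_1", "conv3_2", "conv3_3",
               "conv4_1", "conv4_2", "conv4_3",
               "conv5_1", "conv5_2", "conv5_3"]
FC_LAYERS = ["fc6", "fc7", "fc8"]

keys = sorted(f"{layer}_{suffix}" for layer in CONV_LAYERS + FC_LAYERS for suffix in ("W", "b"))

# Index-based skip, as in model.py
skipped_by_index = [k for i, k in enumerate(keys) if i in (30, 31)]


def keys_to_load(keys, skip_prefix="fc8"):
    """Name-based alternative: keep (index, key) for everything not belonging to fc8."""
    return [(i, k) for i, k in enumerate(keys) if not k.startswith(skip_prefix)]


loaded = keys_to_load(keys)
print(len(keys), skipped_by_index, len(loaded))
```

Inside `load_weights`, the name-based version would replace the index test with `if not k.startswith('fc8'):`, which keeps working if the layer list ever changes length.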

Next comes the training script:

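It covers reading the dataset, preprocessing, labelling each image by the folder it is in, one-hot encoding the labels, setting the parameters, and running the training iterations.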
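Labels come from the folder each image sits in. The stdlib-only sketch below shows that idea in isolation: each file's label is taken from its own parent folder in the same loop that collects the path, so paths and labels cannot get out of step, and the pairs are shuffled together. The helper name `list_labelled_images` and the `Cat`/`Dog` folder layout are assumptions mirroring the script.

```python
import os
import random

CLASS_IDS = {"Cat": 0, "Dog": 1}  # assumed folder names -> class index


def list_labelled_images(file_dir, seed=None):
    """Walk file_dir and return (paths, labels), labelling each file by its parent folder."""
    pairs = []
    for root, _dirs, files in os.walk(file_dir):
        folder = os.path.basename(os.path.normpath(root))
        if folder not in CLASS_IDS:
            continue  # skip the top-level folder and any unknown folders
        for name in sorted(files):
            pairs.append((os.path.join(root, name), CLASS_IDS[folder]))
    random.Random(seed).shuffle(pairs)  # shuffle paths and labels with one permutation
    paths = [p for p, _ in pairs]
    labels = [lbl for _, lbl in pairs]
    return paths, labels
```

Using `os.path.basename` instead of splitting on `'/'` also keeps the folder test working when Windows paths contain backslashes.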

```python
# VGG16 fine-tuning on Dogs vs. Cats (TensorFlow 1.x API)
import os
from time import time

import numpy as np
import tensorflow as tf

import model


# Data input: collect image paths, labelling each file by its parent folder (Cat -> 0, otherwise 1)
def get_file(file_dir):
    image_list = []
    label_list = []
    for root, sub_folders, files in os.walk(file_dir):
        if os.path.normpath(root) == os.path.normpath(file_dir):
            continue  # only images inside the class sub-folders are used
        folder = os.path.basename(os.path.normpath(root))
        for name in files:
            image_list.append(os.path.join(root, name))
            label_list.append(0 if folder == 'Cat' else 1)
    # Shuffle paths and labels with the same permutation
    order = np.random.permutation(len(image_list))
    image_list = [image_list[i] for i in order]
    label_list = [label_list[i] for i in order]
    return image_list, label_list


def get_batch(image_list, label_list, img_width, img_height, batch_size, capacity):
    image = tf.cast(image_list, tf.string)
    label = tf.cast(label_list, tf.int32)
    # Queue that yields one (path, label) pair at a time
    input_queue = tf.train.slice_input_producer([image, label])
    label = input_queue[1]
    image_contents = tf.read_file(input_queue[0])
    image = tf.image.decode_jpeg(image_contents, channels=3)
    image = tf.image.resize_images(image, [img_height, img_width],
                                   method=tf.image.ResizeMethod.NEAREST_NEIGHBOR)
    image_batch, label_batch = tf.train.batch([image, label], batch_size=batch_size,
                                              num_threads=64, capacity=capacity)
    label_batch = tf.reshape(label_batch, [batch_size])
    return image_batch, label_batch


# One-hot encoding with a fixed class count (a batch may contain only cats or only dogs)
def onehot(labels, n_class=2):
    n_sample = len(labels)
    onehot_labels = np.zeros((n_sample, n_class))
    onehot_labels[np.arange(n_sample), labels] = 1
    return onehot_labels


batch_size = 10
capacity = 256  # queue capacity
# Per-channel means subtracted during VGG pretraining
means = [123.68, 116.779, 103.939]
img_width = 224
img_height = 224

start_time = time()
xs, ys = get_file('D:/demo/tensorflow_猫狗大战/tensorflow_猫狗大战/data/')
image_batch, label_batch = get_batch(xs, ys, img_width, img_height, batch_size, capacity)
print(len(xs), len(ys))

x = tf.placeholder(tf.float32, [None, 224, 224, 3])
y = tf.placeholder(tf.float32, [None, 2])

vgg = model.vgg16(x)
# softmax_cross_entropy_with_logits applies softmax itself, so pass the raw fc8 logits, not vgg.probs
logits = vgg.fc8
loss_function = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(logits=logits, labels=y))
optimizer = tf.train.GradientDescentOptimizer(learning_rate=0.001).minimize(loss_function)

sess = tf.Session()
sess.run(tf.global_variables_initializer())
# Load the pretrained weights after initialization so they overwrite the random values
vgg.load_weights('vgg16_weights.npz', sess)
saver = tf.train.Saver()
os.makedirs('./model', exist_ok=True)  # Saver.save needs the directory to exist

coord = tf.train.Coordinator()
threads = tf.train.start_queue_runners(coord=coord, sess=sess)

epoch_start_time = time()
print('Starting training...')
for i in range(100):  # each iteration is one batch, not a full epoch
    images, labels = sess.run([image_batch, label_batch])
    labels = onehot(labels)
    # Update the weights and fetch this batch's loss (computed before the update) in one call
    _, loss = sess.run([optimizer, loss_function], feed_dict={x: images, y: labels})
    print("loss: %f" % loss)
    epoch_end_time = time()
    print('time:', epoch_end_time - epoch_start_time)
    epoch_start_time = epoch_end_time
    # Save a checkpoint every 20 iterations
    if (i + 1) % 20 == 0:
        saver.save(sess, os.path.join('./model/', 'epoch{:06d}.ckpt'.format(i + 1)))
        print("____step %d finished" % (i + 1))

saver.save(sess, './model/')
print('finished, total time:', time() - start_time)

coord.request_stop()
coord.join(threads)
sess.close()
```
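Two details in this script are easy to get wrong, and numpy makes them easy to check. First, `onehot` needs a fixed class count: with `n_class = max(labels) + 1`, a batch that happens to contain only cats (all zeros) yields a `[batch, 1]` array that no longer fits the `[None, 2]` placeholder. Second, `tf.nn.softmax_cross_entropy_with_logits` applies softmax internally, so it should receive the raw fc8 outputs rather than `vgg.probs`; feeding probabilities applies softmax twice, and for 2 classes the loss can then never drop below about 0.31, even for a confident correct prediction. The functions below are a numpy re-implementation for illustration, not TensorFlow's code.

```python
import numpy as np


def onehot(labels, n_class=2):
    """One-hot encode integer labels with a fixed number of classes."""
    labels = np.asarray(labels, dtype=int)
    out = np.zeros((len(labels), n_class))
    out[np.arange(len(labels)), labels] = 1
    return out


def log_softmax(z):
    z = z - z.max(axis=1, keepdims=True)  # subtract row max for numerical stability
    return z - np.log(np.exp(z).sum(axis=1, keepdims=True))


def softmax(z):
    return np.exp(log_softmax(z))


def softmax_cross_entropy_with_logits(logits, labels):
    """Per-example cross-entropy; softmax is applied here, so pass raw logits."""
    return -np.sum(labels * log_softmax(logits), axis=1)


print(onehot([0, 0, 0]).shape)  # stays (3, 2) even with no dog in the batch

logits = np.array([[8.0, -8.0]])  # a confident, correct "cat" prediction
y = onehot([0])
print(softmax_cross_entropy_with_logits(logits, y))           # close to 0
print(softmax_cross_entropy_with_logits(softmax(logits), y))  # roughly 0.31: softmax applied twice
```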

Finally, the prediction (test) script:

```python
import numpy as np
import tensorflow as tf  # TensorFlow 1.x API
import matplotlib.pyplot as plt

import model

tf.reset_default_graph()

# Per-channel RGB means used during VGG pretraining
means = [123.68, 116.779, 103.939]

x = tf.placeholder(tf.float32, [None, 224, 224, 3])

with tf.Session() as sess:
    vgg = model.vgg16(x)
    fc8_finetuning = vgg.probs
    saver = tf.train.Saver()
    print('restoring...')
    saver.restore(sess, './model/')

    filepath = './1116.jpg'
    image_raw_data = tf.gfile.GFile(filepath, 'rb').read()
    # channels=3 forces an [H, W, 3] RGB tensor even for grayscale files
    img_data = tf.image.decode_jpeg(image_raw_data, channels=3)
    plt.imshow(img_data.eval())

    image = tf.image.resize_images(img_data, [224, 224],
                                   method=tf.image.ResizeMethod.NEAREST_NEIGHBOR)
    img = image.eval()
    print(img.shape)  # (224, 224, 3)

    # Preprocessing must match training; subtract the means here only if the model does not:
    # img = img.astype(np.float32) - np.array(means, dtype=np.float32)

    # The placeholder is [None, 224, 224, 3]; wrapping img in a list adds the batch axis
    prob = sess.run(fc8_finetuning, feed_dict={x: [img]})
    max_index = np.argmax(prob)
    if max_index == 0:
        plt.title('The result: cat %.6f' % prob[0, 0])
    else:
        plt.title('The result: dog %.6f' % prob[0, 1])
    plt.show()
```
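On the dimension error mentioned at the start: the placeholder is `[None, 224, 224, 3]`, so a single prediction needs exactly three channels plus a leading batch axis. An image read without forcing the channel count can come back as `[H, W]` or `[H, W, 1]` (grayscale) or `[H, W, 4]` (with an alpha channel). The numpy sketch below, a hypothetical helper `to_vgg_batch` that assumes the image is already resized, normalizes all of these to `(1, H, W, 3)` and can optionally subtract the VGG channel means; do that subtraction exactly once, either here or inside the model.

```python
import numpy as np

VGG_MEANS = np.array([123.68, 116.779, 103.939], dtype=np.float32)  # RGB means from the post


def to_vgg_batch(img, subtract_means=False):
    """Turn one HxW, HxWx1, HxWx3 or HxWx4 image into a float32 batch of shape (1, H, W, 3)."""
    img = np.asarray(img, dtype=np.float32)
    if img.ndim == 2:              # grayscale without a channel axis
        img = img[:, :, np.newaxis]
    if img.shape[-1] == 1:         # grayscale -> repeat into 3 channels
        img = np.repeat(img, 3, axis=-1)
    elif img.shape[-1] == 4:       # RGBA -> drop the alpha channel
        img = img[:, :, :3]
    if img.shape[-1] != 3:
        raise ValueError(f"unsupported image shape: {img.shape}")
    if subtract_means:
        img = img - VGG_MEANS      # broadcasts over H and W
    return np.expand_dims(img, axis=0)  # add the batch axis -> (1, H, W, 3)


gray = np.zeros((224, 224), dtype=np.uint8)
rgba = np.zeros((224, 224, 4), dtype=np.uint8)
print(to_vgg_batch(gray).shape, to_vgg_batch(rgba).shape)
```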

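I typed every line by hand and nearly lost my mind. Study while you're young; I don't want to be a manual laborer anymore. Anyway, enough said: I still have two truckloads of bricks to move tonight…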
